Apple Intelligence is also not great

by cthos

Update June 17th, 2024 - Ed Zitron has a pretty great take on this, wherein he argues that the ChatGPT integration is a mere footnote and it doesn't seem like Apple thinks it's going to be useful.

Well here we are again. Yet another giant company has decided to put Generative AI front and center, embedding it inside the operating system. I'm starting to think this is the most cursed timeline possible (not really, but the simulation is getting real weird).

I'm going to get to that, but first I want to take a minute and talk about that WWDC keynote, because it had some other things that I liked and a bunch more "AI/Machine Learning" than they actually mentioned.

The first hour of the keynote was good

Look, right out of the gate, Apple announced a ton of things for each one of their operating systems. They did the thing that Apple always does: refine some feature someone else has had for years and announce it like it was both their idea and revolutionary. They also did something I thought was smart: when a feature is powered by machine learning, they didn't mention that and just talked about what the feature could do. Brilliant! There are a lot of relevant, applicable things that ML can do for you that don't stray into the generative category.

These very handy features include:

Categorizing your emails into tabs, a feature Gmail has had since 2013. This is almost certainly using some kind of classifier model to do the work. It'll also likely be a bit of a hot mess (but that's okay).

Showing you relevant bits of trivia about the movie or show you're watching, a feature Amazon has had since 2018ish (Prime Video X-Ray)?

Taking 2D images and making them into "spatial photos" (giving them depth) using an ML algorithm (I dunno if there's anything comparable to this one).

Shake your head while wearing headphones to decline a call from your Gam Gam (using an ML algorithm to classify a nod vs a shake), a feature some other buds did in 2021.

Tapping your fingers to do actions on your watch, which again is using a classifier model to understand what gesture you just made.

Sidebar, did anyone else catch the dig at Google Chrome in the Safari announcements? They basically said "Safari is a browser where private mode is actually private" - which is an amazing throwback to this revelation.

By not actually saying anything about ML in those announcements, you instead focus on how things actually make your life easier rather than eating up the current hype.

That calculator is awesome

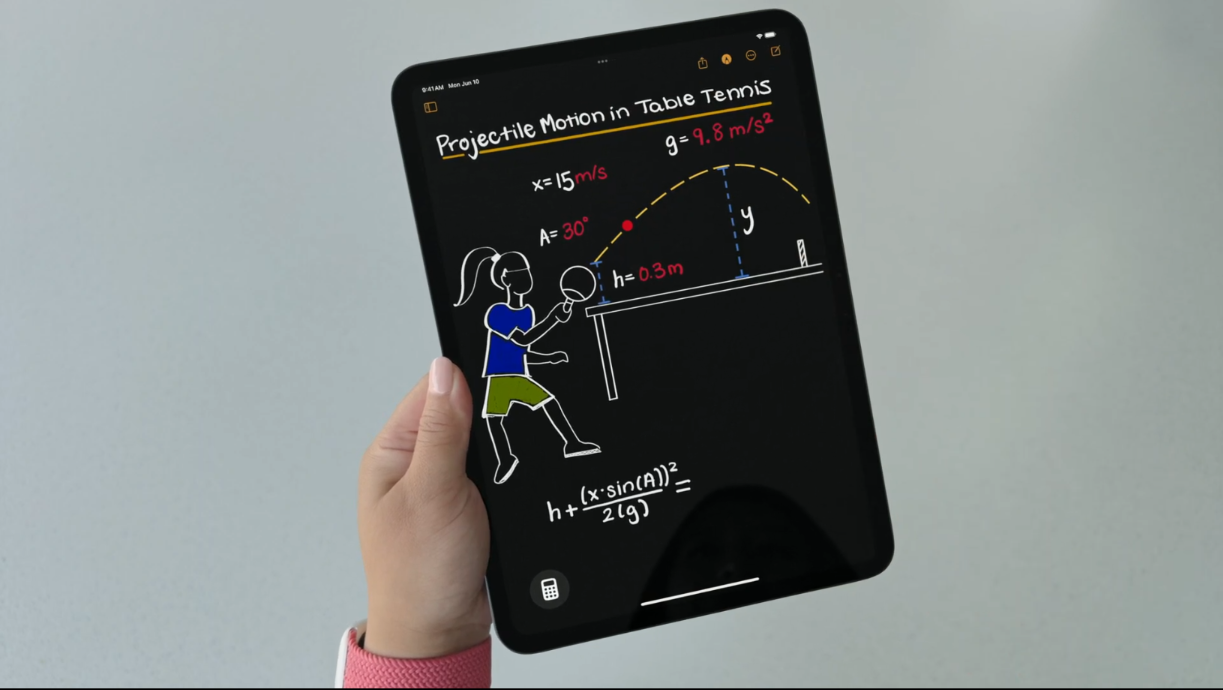

Hands down, the star of the show is the Apple Calculator App for iPadOS. Why the hell the calculator has never been available on an iPad before has been the subject of many a blog post and article.

But the absolute coolest thing that was announced was the handwriting scratchpad. Simply write your equations out, and the app will do the math for you. It has variables! It can do algebra and trigonometry! It'll add up the items in a list you just wrote down.

You know how it's doing that? Optical Character Recognition (OCR), which is another ML algorithm.

Relatedly, that's also how it's doing the "your handwriting is bad and we're gonna make it look less bad but still recognizably like your handwriting".

Bottom Line

I was vibing with the keynote for the majority of the presentation. Useful features, minimal risk, finally a calculator. I was a bit disappointed that they DIDN'T FIX STAGE MANAGER, but otherwise it was a good start.

And then Craig walked out to tell us all about ..... Apple Intelligence, immediately triggering my gag reflex.

Apple Intelligence is the same thing as Recall

...but from a company with a better track record on security.

First things first, I want to say that Apple did a good job of putting privacy and security first. It's clear that they want to position themselves as the "privacy alternative" to Microsoft, and they've done a reasonable job of that over the years. Not perfect, by any stretch, but reasonable. Keep that in mind as we have a little chat about this.

Apple Intelligence, according to Apple, is a bunch of models running primarily locally on your Apple device, provided you've got a strong enough chip (more on that in a second). If the local models determine they can't do a task for you (it's unknown how that decision gets made, probably on a per-feature basis), the task gets farmed out to the cloud, but using "Private Cloud Compute". That's something Apple just cooked up, and they've put out a lot of info about how they plan to do it. They're even opening it up to third-party security researchers. Neat!

I'm just a security enthusiast, not a professional (though I do love a good HackFu), so I'm going to leave the "does this do what they say it does" to others. What I will mention is that Apple's reputation for security is miles better than Microsoft's, so I suspect the general public is more inclined to believe them when they say something.

It's also going to do Recall-esque things across your entire device, because it has deep access to all the data on your device. It won't be taking screenshots every few seconds because it doesn't have to. It already has deep systems-level access to aggregate everything you're doing. They've been doing this for a while now. For example, if someone sends you a picture in iMessage, you can also see that same picture showcased for you in Photos. They've been categorizing people, places, and things in your photos for a long while.

Now, because of the deep integration of all of those things, they can semantically search and gobble all of that up at once. Yay!

Look, Apple does have some goodwill left to give it the benefit of "we're not going to pillage this juicy training source and we pinkie promise it's more secure than Microsoft", but this is the same thing.

These local and cloud models will apparently do all sorts of great things:

Make Siri more useful! (maybe)

Summarize your emails!

Summarize and prioritize your boundless notifications!

Summarize your text messages!

Semantic search across your entire device (just like Recall!)

Semantic Search inside of videos! (Possibly getting the subject very wrong)

Write a bedtime story for your child!

Check your Grammar for you! (I'm not sure why you'd use an LLM for that as I've mentioned before)

- RIP Grammarly?

Make an Emoji of your friend, without their consent, and send it to them!

Make a custom emoji of whatever you want!

...Generate "art" locally, I guess?

Basically, exactly the same stuff that all the other LLM / Diffusion model companies want you to do smashed together with the idea behind Recall.

Let's take a moment and look at some of the examples they gave

This, for me, is one of the key things that illustrates just how "we're grasping for ideas" the LLM/Diffusion crowd is.

Enhance your notes with hallucinatory diffusion

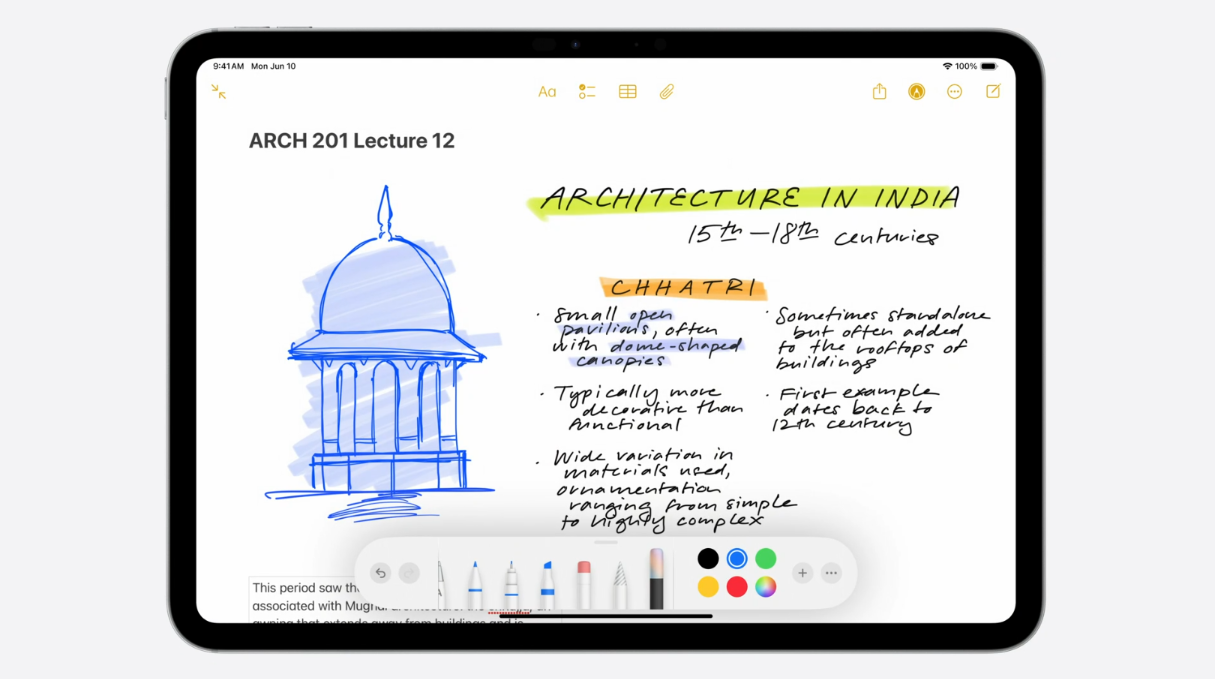

The one that really got me, and that I thought was absolutely ridiculous, was an example of a student learning about architecture. They'd drawn a fantastic sketch of a building that they were learning about. BUT WAIT! That image is just a sketch. What if we had a machine hallucinate a copy of it? The demo goes on to show how a generative model takes the sketch and makes it into a picture.

Who the hell wants to do that? First off, it was a great sketch, and didn't need to be "enhanced". Second, you're learning about something that presumably...exists. That there are real photos of. Why would you waste the electricity to make a brand new image that may or may not look like what you wanted, when you could...I don't know, find an actual photo of the thing? Wikipedia has one, even! I just... ugh.

It's one thing to want to generate an image of a fictional character that doesn't exist and a whole other thing to want to generate an image of things that absolutely do already exist.

Send your friends creepy pictures of them

I don't have a lot of energy to talk about this one, but if you thought memoji were weird, at least you had control over those. This time, because Apple Intelligence "knows your friends", you can now make a memoji for them whether they like it or not. Doing...who knows what? Can I type in whatever prompt I want?

I do not like this. Please do not send me any of these. I might block you.

Relatedly, you're going to be able to do contextual diffusion models right there to "really express what you're feeling". I honestly do not understand why anyone would want to do this. I don't get it. Please do not explain it to me. I don't want to know.

Anyhow, those are just two examples of "I don't think they've got a killer use case for this so we're going to guess at what people do with their phones". The real problem is the giant OpenAI shaped elephant in the room.

All the Privacy in the world stops when you send stuff to OpenAI

The other "major announcement" that Apple made at WWDC was their partnership with OpenAI.

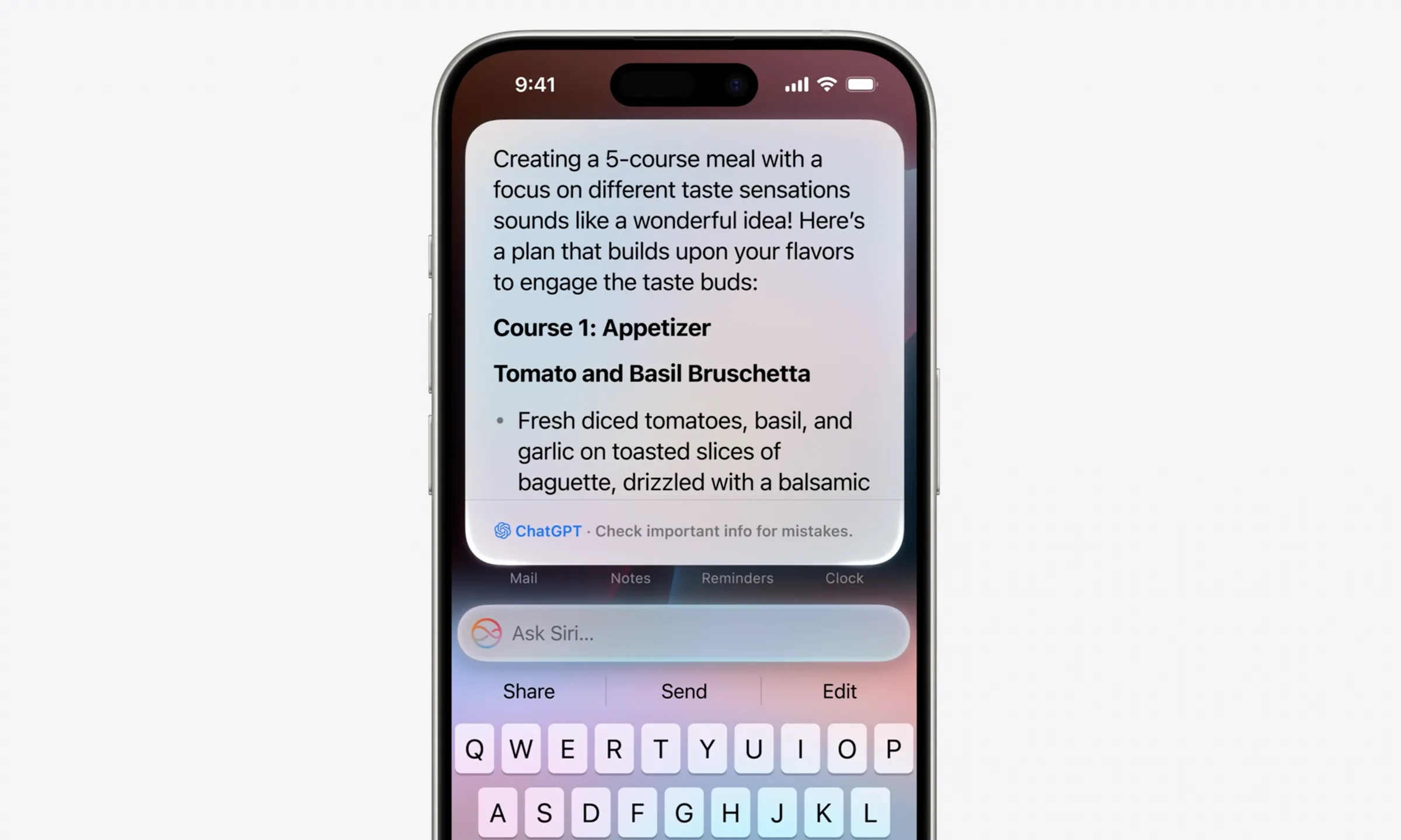

Somehow (the keynote wasn't exactly clear on how), if a local model determines that it can't do a thing for you, and the private cloud models can't either, it may prompt you to send your query to OpenAI. This might just be isolated to Siri, or it could be everywhere? I'm not sure.
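For the code-minded, here's roughly how I picture that three-tier handoff. This is a toy sketch and pure guesswork on my part: Apple hasn't said how the decision actually gets made, so the `difficulty` score, the two thresholds, and every name in here (`route`, `Request`, `Tier`, `ask_user`) are hypothetical stand-ins I made up for illustration. The only bit taken from the keynote is the ordering: local first, then Private Cloud Compute, then a prompt before anything goes to OpenAI.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable


class Tier(Enum):
    ON_DEVICE = "on-device model"
    PRIVATE_CLOUD = "Private Cloud Compute"
    THIRD_PARTY = "OpenAI"
    DECLINED = "user said no"


@dataclass
class Request:
    text: str
    difficulty: int  # hypothetical stand-in for "how hard is this task"


# Made-up capability ceilings. The real criteria are not public.
ON_DEVICE_MAX = 3
PRIVATE_CLOUD_MAX = 7


def route(request: Request, ask_user: Callable[[str], bool]) -> Tier:
    """Pick the first tier that can handle the request, asking before leaving Apple."""
    if request.difficulty <= ON_DEVICE_MAX:
        return Tier.ON_DEVICE
    if request.difficulty <= PRIVATE_CLOUD_MAX:
        return Tier.PRIVATE_CLOUD
    # Neither Apple tier can do it: the user gets prompted before the hand-off.
    if ask_user(f"Send {request.text!r} to OpenAI?"):
        return Tier.THIRD_PARTY
    return Tier.DECLINED


if __name__ == "__main__":
    always_no = lambda prompt: False  # me, every single time
    for req in (Request("set a timer", 1),
                Request("summarize my inbox", 5),
                Request("plan a five course meal", 9)):
        print(req.text, "->", route(req, always_no).value)
```

If it really is a chain like this, the privacy story only holds for the first two branches. Everything past the prompt is somebody else's promise.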

It's not exactly a "groundbreaking" integration. It's basically the same thing as any other OpenAI API wrapper, but at the OS level (Yikes).

The major problem with this is you may trust Apple not to leak your data everywhere, but you sure as hell shouldn't extend the same trust to OpenAI. Apple assures us that OpenAI isn't retaining that data, but OpenAI has been caught lying repeatedly. You cannot trust OpenAI to do what it says it's going to, and shipping an OpenAI integration right in Apple operating systems is a major breach of trust.

I don't like this one bit.

I hope that there's a way to disable it.

Also, no talk of hallucinations

Notably absent from Apple's announcements is "what happens if the thing hallucinates". All we got was a single line at the bottom of a screenshot saying to "Check important info for mistakes". Awesome. We're going to have LLMs summarize everything on your device, and we're not going to mention that it's liable to get those things wrong. Cool.

I expect, especially with locally running models, you're going to get more hallucinations, not fewer, but Apple seems to think the opposite. Guess we'll find out.

What I'm going to do

Well, here's a silver lining. I have an iPhone 14, which will not support Apple Intelligence (excellent). So I guess I'm just never going to buy a newer iPhone? My iPad and MacBook do support these features so... I'm going to wait until I hear about how much of it I can opt out of before I update to the next version of the OS.

It's possible I'll be sitting on an old OS forever.

Or I'll go live in the woods I guess. I do not want any of this, and it's being foisted upon me because our tech overlords think it's what investors want. The enshittification will continue until morale improves.